Overview

Docker has revolutionized the way we build, ship, and run applications. In this article, we'll delve into the fundamental aspects of Docker, exploring its purpose, benefits, security considerations, essential tools, and associated terms.

What is Docker?

Docker is an open-source platform for developing, shipping, and running applications.

Docker provides the ability to package and run an application in a loosely isolated environment called a container. The isolation and security let you run many containers simultaneously on a given host. Containers are lightweight and contain everything needed to run the application, so you don't need to rely on what's installed on the host.

Ultimately, Docker provides a consistent environment across different infrastructures, making developing, testing, and deploying software applications easier.

What is the Purpose of Docker?

The primary purpose of Docker is to simplify the software development and deployment process.

Docker eliminates the "it works on my machine" problem by "containerizing" applications, ensuring you can ship, test, and deploy your application in any environment without worrying about incompatibility issues, regardless of the underlying machine's configuration settings.

It works on my machine...

Tools and Terms

To fully understand Docker, you must familiarize yourself with key tools and terms.

- Dockerfile: A Dockerfile is a text file that contains instructions for building a Docker image. It specifies the base image, environment variables, and commands to run during image creation.

- Images: Docker images are read-only templates that contain instructions for creating a container. A Docker image is a snapshot or blueprint of the code, runtime, configurations, libraries, and dependencies required inside a container for an application to run.

- Containers: Docker containers are lightweight, portable, and self-sufficient execution environments that run isolated applications. They encapsulate an application and its dependencies, allowing it to run consistently across different environments.

- Docker Registry: A Docker Registry is a storage and distribution service for Docker images. It allows users to upload, store, and share Docker images privately or publicly. DockerHub is a popular example of a Docker Registry.

- Docker Hub: Docker Hub is a cloud-based registry service provided by Docker that hosts a vast collection of public and private Docker images. It allows users to share, distribute, and collaborate on containerized applications and services.

- Docker Desktop: Docker Desktop is a desktop application with an easy-to-use interface for building, running, and managing Docker containers on Windows and macOS operating systems. It includes the Docker Engine, CLI tools, and other utilities.

- Docker Compose: Docker Compose is a tool for defining and running multi-container Docker applications. It uses a YAML file to configure the services, networks, and volumes required for a multi-container application and simplifies the process of managing complex Docker deployments.

- Kubernetes: Kubernetes is an open-source container orchestration platform for automating containerized applications' deployment, scaling, and management. It provides features such as service discovery, load balancing, auto-scaling, and rolling updates to ensure the reliability and scalability of applications running in containers.

How Does Docker Work?

Docker combines virtualization and container technology to provide an isolated sandbox environment. This environment facilitates the creation of lightweight containers, streamlining the application development and deployment processes.

Architecture

Docker uses a client-server architecture.

The Docker client talks to the Docker daemon, which does the heavy lifting of building and running your Docker containers. The Docker client and daemon can run on the same or different system. The Docker client and daemon communicate using a REST API, over UNIX sockets or a network interface.

A Docker registry is a storage and distribution service for Docker images. It is a centralized repository where Docker images can be uploaded, stored, managed, and shared. Docker registries enable developers to publish their images privately or publicly, allowing others to access and deploy them in their environments.

- Docker Daemon - The Docker daemon (

dockerd) listens for Docker API requests and manages Docker objects such as images, containers, networks, and volumes. - Docker Client - The Docker client (

dockercommand) is the primary way users interact with Docker. When a user types a command likedocker run, the client sends these commands to the Docker Daemon (dockerd) to be executed. - Docker Registries - Docker registries store Docker images (Docker Hub is the most popular public registry). When a user uses the

docker pullordocker runcommand, Docker pulls the required image(s) from a configured registry. Usingdocker pushwill push an image to the configured registry.

Docker Architecture

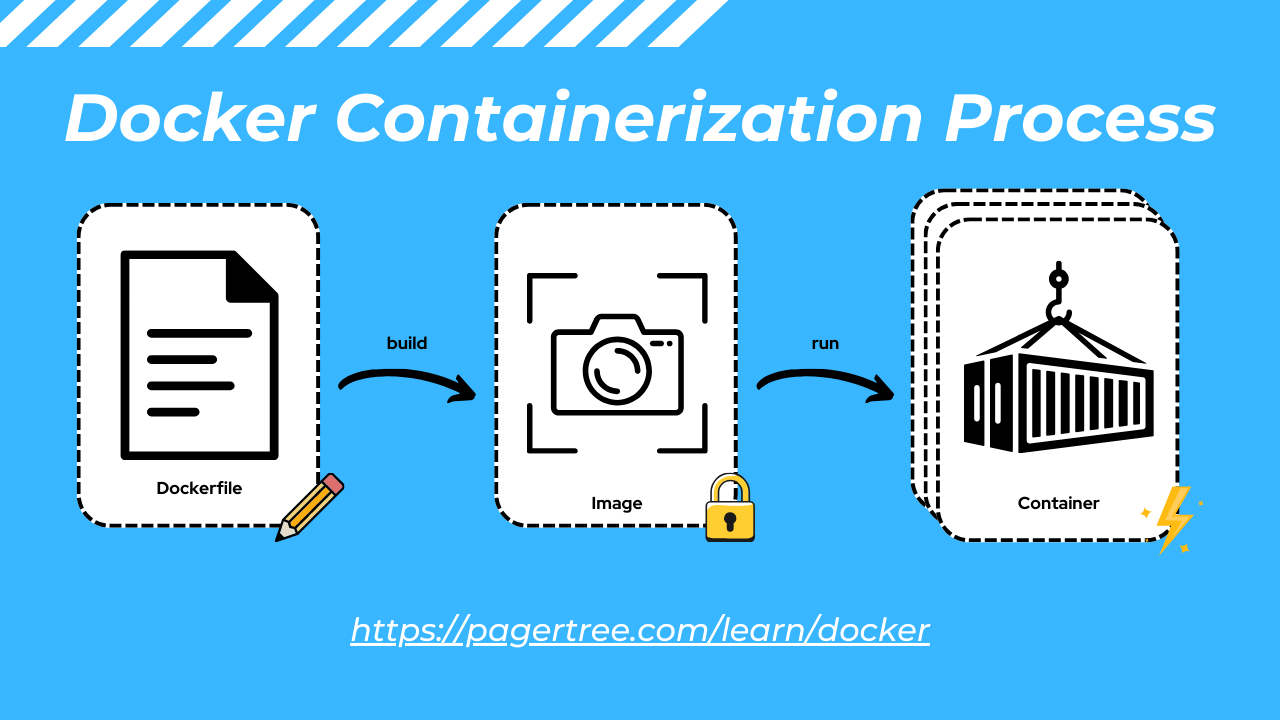

Containerization Process

Docker simplifies the process of packaging, deploying, and running applications through a streamlined workflow that begins with a Dockerfile and culminates in a running container. Let's explore each step of this process in detail:

- Dockerfile - A Dockerfile serves as the recipe for building a Docker image. It contains instructions that specify how to construct the image, including the base image to use, environment variables, dependencies to install, and commands to execute. The Dockerfile provides a declarative, version-controlled blueprint for creating consistent and reproducible images across different environments.

- Build Image - Once a Dockerfile is created, the next step is to build the Docker image using the

docker buildcommand. This command reads the instructions in the Dockerfile and executes them sequentially, layer by layer, to construct the image. Each instruction in the Dockerfile creates a new image layer, which is cached for subsequent builds to improve build performance.

During the build process, Docker pulls the necessary base image layers from a registry (e.g., Docker Hub) and executes the instructions in the Dockerfile to customize the image. Once the build is complete, Docker generates a new image with a unique identifier known as a digest. - Run Container - With the Docker image built, the final step is to run a container based on that image using the

docker runcommand. This command creates a new container instance from the specified image and starts it according to the configuration defined in the Dockerfile.

When running a container, Docker provisions resources such as CPU, memory, and network interfaces based on the container's configuration. The containerized application runs within this isolated environment, leveraging the dependencies and runtime environment specified in the Docker image.

Once the container runs, it can be managed, monitored, and scaled using Docker's command-line interface (CLI) or container orchestration tools such as Docker Swarm or Kubernetes.

Docker Containerization Process - Dockerfile, Image, Container

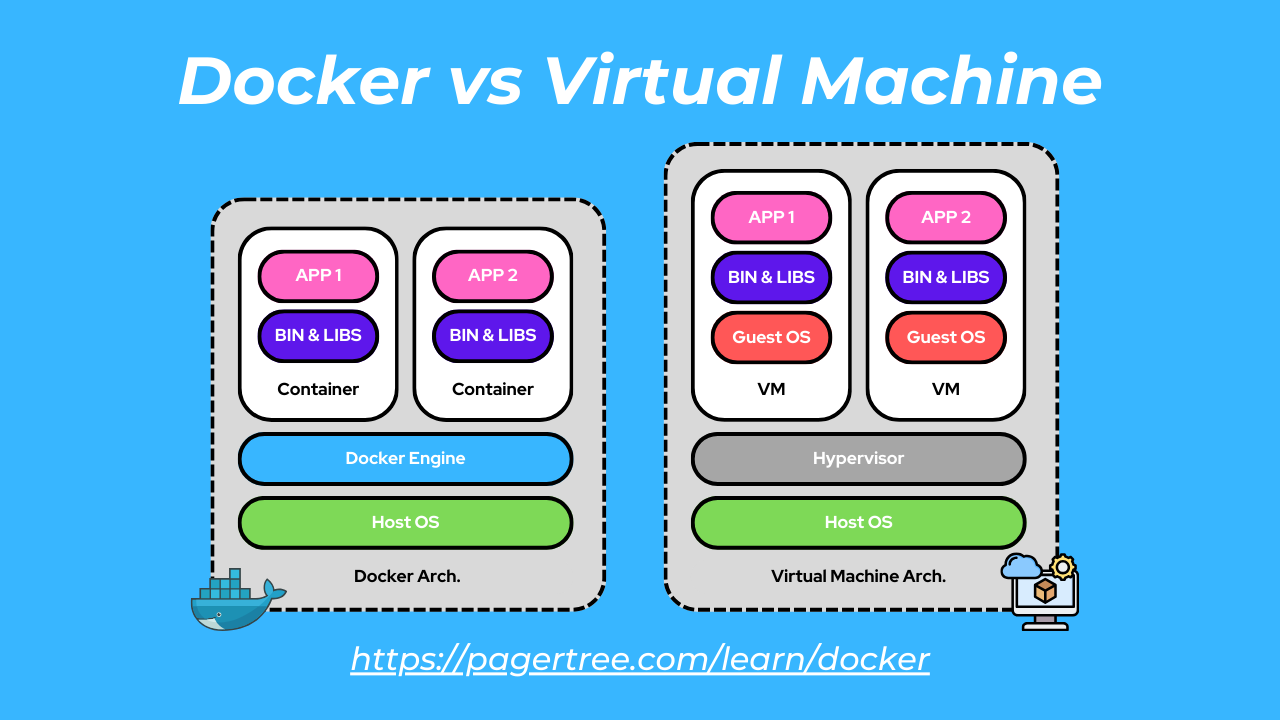

Docker vs Virtual Machines

Containers and virtual machines have similar resource isolation and allocation benefits but function differently because containers virtualize the operating system (OS) instead of the hardware. Containers are more portable and efficient.

- Containers: Containers share the host operating system's (OS) kernel and are lightweight, with minimal overhead. They provide fast startup times and efficient resource utilization.

- Virtual Machines: Virtual machines run on top of a hypervisor and have their own guest operating system. They are heavier than containers, with higher resource overhead and slower startup times.

Docker vs. Virtual Machine

Frequently Asked Questions

What are the Benefits of Docker?

- Portability: Docker containers can run on any platform that supports Docker, providing consistency across environments.

- Scalability: Docker enables horizontal scaling, allowing applications to handle increased workloads by adding more containers.

- Security: Docker containers are isolated from each other and the host machine, making them very secure.

- Efficiency: Docker containers share the host operating system's kernel, resulting in lower overhead and faster startup times than traditional virtual machines (see Docker vs VMs section below).

- Isolation: Containers isolate applications and their dependencies, preventing conflicts and ensuring reproducibility.

- Consistency: Docker streamlines the CI/CD process, reducing the likelihood of deployment errors caused by environment differences.

- Version Control: Docker images can be version-controlled, enabling developers to track changes and roll back to previous versions if needed.

Why Use Docker?

Docker allows you to ship, test, and deploy your applications in any environment without worrying about incompatibility issues, regardless of the machine's configuration settings.

Docker simplifies the software development lifecycle by streamlining the process of building, shipping, and running applications. It improves productivity, accelerates time to market, and enhances collaboration among development teams.

When Use Docker?

Docker is suitable for a wide range of use cases, including:

- Developing and testing applications in isolated environments.

- Continuous integration and continuous delivery (CI/CD) pipelines.

- Scaling applications horizontally to handle varying workloads.

- Containerizing legacy applications for modernization and portability.

Is Docker Secure?

Security is a top priority for Docker, and the platform offers several features to ensure the integrity and isolation of containers. These include:

- Namespaces and Control Groups: Docker utilizes Linux kernel features such as namespaces and control groups (cGroups) to isolate containers from each other and the host system.

- Image Signing and Verification: Docker supports image signing and verification to ensure that only trusted images are used in production environments.

- Security Scanning: Tools like Snyk can be used for automated vulnerability scanning to identify container image vulnerabilities and provide remediation recommendations.

- Role-Based Access Control (RBAC): Docker Enterprise Edition offers RBAC capabilities to control access to Docker resources based on user roles and permissions.